Research

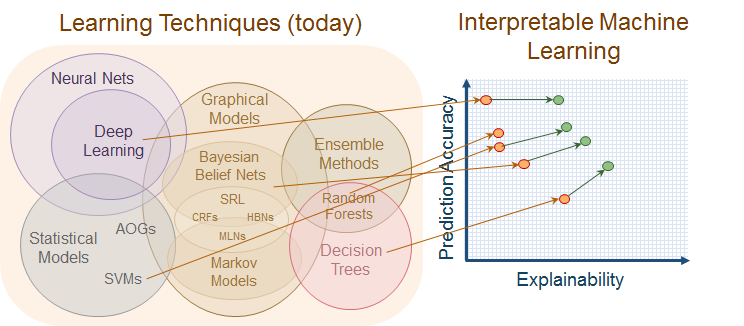

- Interpretable Machine Learning

Machine learning techniques such as deep neural networks have become indispensable tools for an increasing number of complex tasks, owing to the availability of large databases and recent improvements in deep learning methodology. However, because of their nested non-linear structure, these models are usually applied as black boxes, which limits their use in many scenarios. It is therefore imperative to develop methods for explaining and interpreting current machine learning models. We intend to carry out a systematic investigation of how to build an interpretable artificial intelligence framework that bridges the gap between abstract latent features and human concepts, supported by sound theoretical analysis and extended to a variety of applications.